On May 16, Apple previewed new software features for cognitive, vision, hearing, and mobility accessibility, along with innovative tools for people who are nonspeaking or at risk of losing their ability to speak. These updates draw on advances in hardware and software, including on-device machine learning to protect user privacy, and extend Apple’s long-standing commitment to making products that everyone can use.

Apple said it develops accessibility features in close collaboration with community organizations representing users with a broad range of disabilities, so that the features make a real difference in people’s lives. Starting later this year, users with cognitive disabilities will be able to use iPhone and iPad more easily and independently with Assistive Access. Nonspeaking users will be able to type what they want to say and have it spoken aloud during calls and in-person conversations with Live Speech. Users at risk of losing their ability to speak can use Personal Voice to create a synthesized voice that sounds like their own and use it to talk with family and friends. For users who are blind or have low vision, Detection Mode in Magnifier adds Point and Speak, which identifies the text a user points at and reads it aloud, helping them interact with physical objects such as household appliances.

“At Apple, we’ve always believed that technology is at its best when it works for everyone,” said Apple CEO Tim Cook. “Our long-standing work to make technology accessible gives everyone the opportunity to create, connect, and do what they love.”

“Accessibility is part of everything we do at Apple,” said Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives. “These new features support the diverse needs of our users and help people connect in new ways.”

Assistive Access uses an innovative design to distill the Camera, Photos, Music, Calls, and Messages apps on iPhone down to their essential features. It includes a customized experience for Phone and FaceTime, which are combined into a single Calls app, alongside Messages, Camera, Photos, and Music. The feature offers a distinct interface with high-contrast buttons and large text labels, as well as tools that let trusted supporters tailor the experience for the person they support.

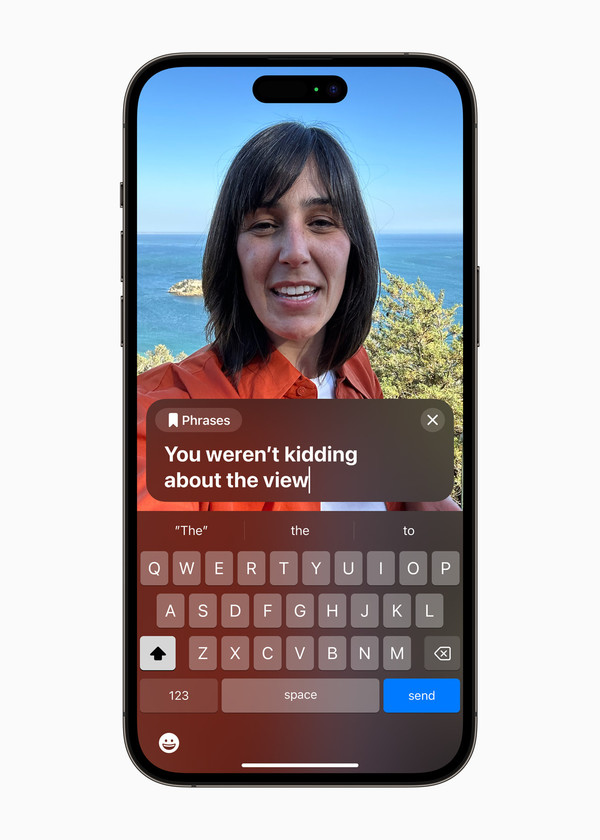

Live Speech and Personal Voice make spoken communication more accessible. Live Speech is designed to support the millions of people around the world who are unable to speak or who have lost their speech over time. With Live Speech on iPhone, iPad, and Mac, users can type what they want to say and have it spoken aloud during phone calls, FaceTime calls, and in-person conversations. Users can also save frequently used phrases so they can chime in quickly during lively conversations with family, friends, and colleagues.

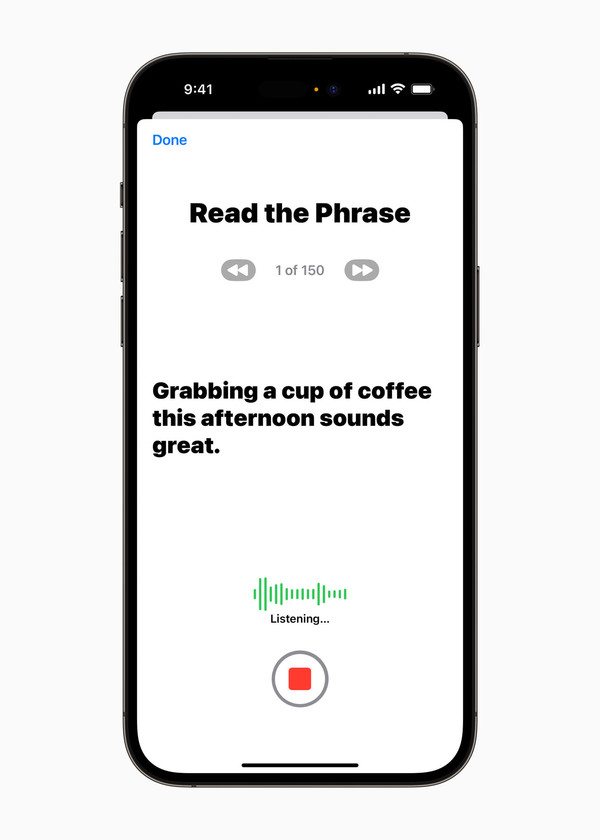

Personal Voice is a simple and secure way for users at risk of losing their ability to speak to create a voice that sounds like their own. Users create a Personal Voice by reading along with a randomized set of text prompts to record 15 minutes of audio on iPhone or iPad. This speech accessibility feature uses on-device machine learning to keep users’ information private and secure, and it integrates seamlessly with Live Speech, so users can speak in their Personal Voice when talking with loved ones.

In addition to these features for nonspeaking users, Apple introduced Point and Speak in Magnifier’s Detection Mode for users who are blind or have low vision. The feature makes it easier for users with vision disabilities to interact with physical objects that carry several text labels. For example, when a user operates a household appliance such as a microwave oven, Point and Speak combines input from the camera and the LiDAR Scanner with on-device machine learning to read aloud the text on each button as the user moves their finger across the keypad. Point and Speak also works with VoiceOver and can be used alongside other Magnifier features such as People Detection, Door Detection, and Image Descriptions to help visually impaired users navigate their physical environment.

To further improve accessibility, Apple has also made enhancements in areas such as hearing device connectivity, Voice Control, and the option to automatically pause images with moving elements, such as GIFs, in Safari. In honor of Global Accessibility Awareness Day, Apple is also rolling out new features, curated collections, and more this week, including inviting three disability community leaders to share their lived experiences and discuss the major changes that augmentative and alternative communication (AAC) apps have brought to their lives.